Building a Kubernetes cluster with kubespray

Server resources

Count: 3

CPU: 2 cores

Memory: 4 GB

Details are in the table below:

| OS | IP address | Node role | CPU | Memory | Hostname |
| :------: | :--------: | :-------: | :-----: | :---------: | :-----: |
| centos-7.5 | 192.168.31.92 | master | 2 cores | 4G | node-1 |
| centos-7.5 | 192.168.31.93 | master | 2 cores | 4G | node-2 |
| centos-7.5 | 192.168.31.94 | worker | 2 cores | 4G | node-3 |

System setup

- Set the hostname on all three machines

# Show the current hostname

hostname

# Change the hostname

hostnamectl set-hostname <your_hostname>
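To keep the hostnames consistent with the table above, the IP-to-hostname mapping can be written down once and reused on each machine. This is only a sketch: `expected_hostname` is a hypothetical helper name, and the mapping is copied from the table. It prints the plan and changes nothing.

```shell
#!/bin/sh
# Hypothetical helper: map a node IP from the table to its intended hostname.
expected_hostname() {
  case "$1" in
    192.168.31.92) echo node-1 ;;
    192.168.31.93) echo node-2 ;;
    192.168.31.94) echo node-3 ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
}

# Print the planned mapping; nothing is changed on the machine.
for ip in 192.168.31.92 192.168.31.93 192.168.31.94; do
  echo "$ip -> $(expected_hostname "$ip")"
done

# On the node itself (as root), something like:
#   hostnamectl set-hostname "$(expected_hostname 192.168.31.92)"
```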

- Disable the firewall, SELinux, and swap, and reset iptables

# Disable SELinux

setenforce 0

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

# Stop and disable the firewall

systemctl stop firewalld && systemctl disable firewalld

# Reset the iptables rules

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

# Turn off swap

swapoff -a && free -h
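`swapoff -a` only lasts until the next boot: swap entries in /etc/fstab are activated again on startup, and this guide reboots the machines later. One way to make the change stick is to comment those entries out. The sketch below assumes a conventional fstab layout; `disable_swap_in_fstab` is a hypothetical helper, and the demonstration runs on a throwaway file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file (keeps a .bak copy).
disable_swap_in_fstab() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$1"
}

# Demonstration on a throwaway file, not the real /etc/fstab.
demo=$(mktemp)
cat > "$demo" <<'EOF'
/dev/mapper/centos-root /     xfs   defaults 0 0
/dev/mapper/centos-swap swap  swap  defaults 0 0
EOF
disable_swap_in_fstab "$demo"
cat "$demo"

# On a real node (as root): disable_swap_in_fstab /etc/fstab
```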

# Stop dnsmasq (otherwise containers may fail to resolve domain names)

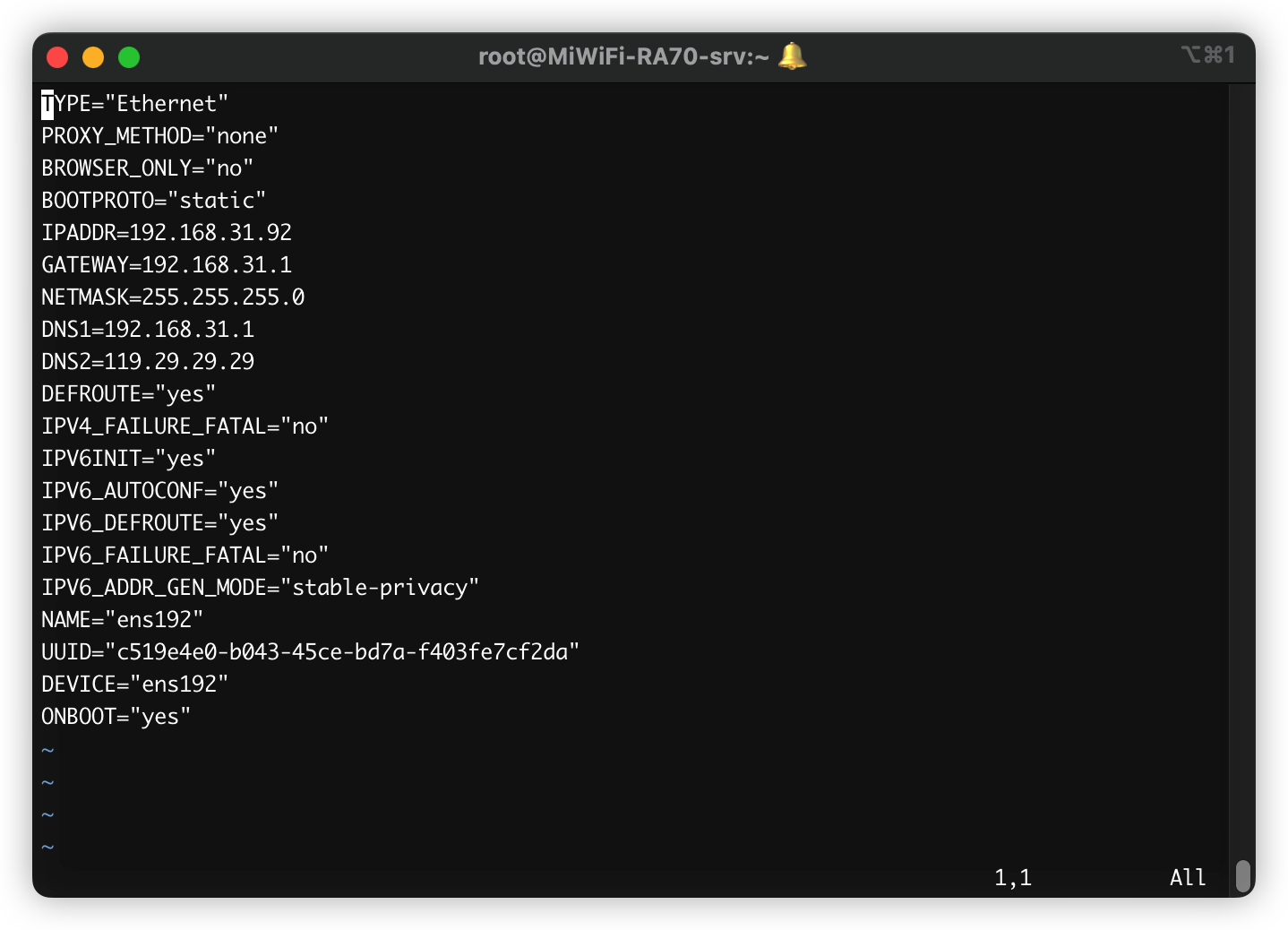

service dnsmasq stop && systemctl disable dnsmasq

- Configure a static IP

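If you are using purchased cloud servers, you can skip this step.

# The digits after ifcfg-ens differ between environments and machines; look up the name on your own system

vim /etc/sysconfig/network-scripts/ifcfg-ens192

Change BOOTPROTO from dhcp to static

and add the static IP settings:

IPADDR=192.168.31.92 # static IP

GATEWAY=192.168.31.1 # default gateway

NETMASK=255.255.255.0 # subnet mask

DNS1=192.168.31.1 # DNS servers

DNS2=119.29.29.29

The file should end up with roughly these settings.

Restart the network service:

service network restart

If you are connected over SSH, you will need to reconnect, because the session drops once the IP changes.

IP addresses on the same LAN must not collide, so check on your router which addresses are already in use beforehand.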
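The static IP edit can also be scripted instead of made by hand in vim. The following is a minimal sketch under stated assumptions: `make_static` is a hypothetical helper, the netmask and second DNS server are the values used in this guide, and the first DNS server is assumed to be the gateway, as in the settings listed above. It is demonstrated on a scratch file, not a real interface config.

```shell
#!/bin/sh
# Hypothetical helper: switch an ifcfg-style file to a static address.
# $1 = config file, $2 = IP address, $3 = gateway (also used as DNS1)
make_static() {
  cfg=$1 ip=$2 gw=$3
  sed -i.bak -E 's/^BOOTPROTO=.*/BOOTPROTO=static/' "$cfg"
  cat >> "$cfg" <<EOF
IPADDR=$ip
GATEWAY=$gw
NETMASK=255.255.255.0
DNS1=$gw
DNS2=119.29.29.29
EOF
}

# Demonstration on a scratch file that mimics a DHCP-configured interface.
cfg_demo=$(mktemp)
printf '%s\n' 'TYPE=Ethernet' 'BOOTPROTO=dhcp' 'NAME=ens192' 'ONBOOT=yes' > "$cfg_demo"
make_static "$cfg_demo" 192.168.31.92 192.168.31.1
cat "$cfg_demo"
```

The helper keeps a `.bak` copy next to the file, which makes it easy to roll back if the machine becomes unreachable after the network restart.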

- Kernel parameters for Kubernetes

# Create the config file

cat > /etc/sysctl.d/kubernetes.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

vm.overcommit_memory = 1

EOF
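On many kernels the two `net.bridge.*` keys only exist once the `br_netfilter` module is loaded, and `sysctl -p` reports an error for keys it cannot find. The sketch below checks for them before the file is applied. `bridge_sysctls_present` is a hypothetical helper; its optional argument exists only so the check can be tried against a scratch directory.

```shell
#!/bin/sh
# Report whether the bridge sysctl keys exist. The optional argument is the
# sysctl root to inspect (defaults to /proc/sys).
bridge_sysctls_present() {
  root=${1:-/proc/sys}
  [ -e "$root/net/bridge/bridge-nf-call-iptables" ] &&
    [ -e "$root/net/bridge/bridge-nf-call-ip6tables" ]
}

if bridge_sysctls_present; then
  echo "bridge sysctl keys are available"
else
  echo "bridge sysctl keys missing; as root, try: modprobe br_netfilter"
fi

# To load the module on every boot (as root), one common approach:
#   echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
```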

# Apply the settings

sysctl -p /etc/sysctl.d/kubernetes.conf

- Remove Docker-related packages (optional)

yum remove -y docker*

rm -f /etc/docker/daemon.json

- Reboot. Once everything is configured, you can reboot the machines:

reboot

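Deploying the cluster with kubespray

This part only needs to run on a single operator node, which can be a node inside the cluster or a machine outside it, even your own laptop. Here we take the more common route and use any one of the Linux nodes in the cluster.

- Set up passwordless SSH

Allow the operator node to log in to every node without a password.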
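As an alternative to pasting the public key by hand on every node, `ssh-copy-id` (shipped with the OpenSSH client) can append it for you once the key pair exists and password login still works. The sketch below only prints the commands it would run; the node list is taken from the table at the top, and `print_copy_commands` is a hypothetical helper name.

```shell
#!/bin/sh
# Node IPs from the table at the top of this guide.
NODES="192.168.31.92 192.168.31.93 192.168.31.94"

# Print (not run) one ssh-copy-id command per node.
print_copy_commands() {
  for ip in $NODES; do
    echo "ssh-copy-id -i /root/.ssh/id_rsa.pub root@$ip"
  done
}

print_copy_commands

# Once the keys are in place, a quick non-interactive check per node could be:
#   ssh -o BatchMode=yes root@192.168.31.93 hostname
```

The manual route, which works everywhere, is: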

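# 1. Generate a key pair (run ssh-keygen and press Enter at every prompt)

ssh-keygen

# 2. Print the generated public key and copy it

cat /root/.ssh/id_rsa.pub

# 3. Log in to each node and append the public key to /root/.ssh/authorized_keys

mkdir -p /root/.ssh

echo "<public key copied in the previous step>" >> /root/.ssh/authorized_keys

- Download and install dependencies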

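# Install base packages (epel-release goes first so the EPEL repo is enabled before python36 is resolved)

yum update

yum install -y epel-release

yum install -y python36 python36-pip git wget curl

# Download the kubespray source

wget https://github.com/kubernetes-sigs/kubespray/archive/v2.15.0.tar.gz

# Extract it

tar -xvf v2.15.0.tar.gz && cd kubespray-2.15.0

# Install the requirements

cat requirements.txt

pip3.6 install -r requirements.txt

## If the install fails, try upgrading pip first

## pip3.6 install --upgrade pip

- Generate the configuration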

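The project keeps a cluster's base configuration in a directory; the sample lives in inventory/sample, and we copy it to use as our own cluster's configuration.

# Copy the sample configuration as a starting point for customization

cp -rpf inventory/sample inventory/mycluster

kubespray ships a Python script that generates the inventory file from environment variables, so all we need to do now is set those variables.

# Use the real hostnames (otherwise your hostnames are automatically changed to node1/node2/...)

export USE_REAL_HOSTNAME=true

# Location of the generated inventory file

export CONFIG_FILE=inventory/mycluster/hosts.yaml

# IP list (internal IPs of your servers, three or more; the first two become master nodes by default)

declare -a IPS=(192.168.31.92 192.168.31.93 192.168.31.94)

# Generate the inventory file

python3 contrib/inventory_builder/inventory.py ${IPS[@]}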
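Since the inventory builder takes whatever list it is given, a small sanity check on the IP list before running it can catch typos early. This is a sketch, not part of kubespray: `validate_ips` is a hypothetical helper that only checks the count (three or more, as required above) and the dotted-quad shape, not whether each octet is in range or the host is reachable.

```shell
#!/bin/sh
# Hypothetical pre-check: at least three arguments, each shaped like an IPv4 address.
validate_ips() {
  if [ "$#" -lt 3 ]; then
    echo "need at least 3 IPs, got $#" >&2
    return 1
  fi
  for ip in "$@"; do
    if ! printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
      echo "not an IPv4 address: $ip" >&2
      return 1
    fi
  done
}

if validate_ips 192.168.31.92 192.168.31.93 192.168.31.94; then
  echo "IP list looks fine"
fi
```

In the bash session used above, it could be called as `validate_ips "${IPS[@]}"` before invoking inventory.py.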

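- Customize the configuration

The generated configuration can be used as is, but it will not cover everyone's needs. Docker or containerd? Should Docker keep its default working directory /var/lib/docker? And so on. By default kubespray also downloads images and binaries from Google's official repositories, which requires your servers to reach the outside internet, and that too takes some configuration changes.

The deployment stage needs access to Google and other services hosted abroad, so the servers need an HTTP proxy that can reach them. I already had a proxy service running, so I won't go into setting one up. My HTTP proxy is http://192.168.31.214:7890

# Customize the configuration files

# 1. Node layout (adjust each node's role here)

vim inventory/mycluster/hosts.yaml

# 2. containerd settings (this tutorial uses containerd as the container runtime)

vim inventory/mycluster/group_vars/all/containerd.yml

# 3. Global settings (http(s) proxies for outside access can be configured here)

vim inventory/mycluster/group_vars/all/all.yml

# 4. Kubernetes cluster settings (container runtime, service CIDR, pod CIDR, and so on)

vim inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

# 5. Change the etcd deployment type to host (the default is docker)

vim ./inventory/mycluster/group_vars/etcd.yml

# 6. Add-ons (ingress, dashboard, and so on)

vim ./inventory/mycluster/group_vars/k8s-cluster/addons.yml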
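The three changes this tutorial relies on (the proxy in all.yml, containerd as the runtime, etcd deployed on the host) can also be scripted instead of made in vim. The sketch below is an assumption-heavy illustration: `set_yaml_var` is a hypothetical helper, and the variable names `http_proxy`, `https_proxy`, `container_manager`, and `etcd_deployment_type` are what I recall from the v2.15 sample inventory, so verify them against your copy. It runs against scratch files rather than the real inventory.

```shell
#!/bin/sh
# Hypothetical helper: set a top-level "key: value" line in a YAML file.
# An existing line (optionally commented out with "# ") is rewritten in place;
# otherwise the setting is appended. Values must not contain "|" or "&".
set_yaml_var() {
  file=$1 key=$2 value=$3
  if grep -Eq "^#? *${key}:" "$file"; then
    sed -i.bak -E "s|^#? *${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

# Scratch files standing in for the real group_vars files (assumed contents).
work=$(mktemp -d)
printf '%s\n' '## proxy settings' '# http_proxy: ""' '# https_proxy: ""' > "$work/all.yml"
printf '%s\n' 'container_manager: docker' > "$work/k8s-cluster.yml"
printf '%s\n' 'etcd_deployment_type: docker' > "$work/etcd.yml"

set_yaml_var "$work/all.yml" http_proxy '"http://192.168.31.214:7890"'
set_yaml_var "$work/all.yml" https_proxy '"http://192.168.31.214:7890"'
set_yaml_var "$work/k8s-cluster.yml" container_manager containerd
set_yaml_var "$work/etcd.yml" etcd_deployment_type host

cat "$work"/*.yml
```

Against the real inventory, the file arguments would be the paths listed above, for example inventory/mycluster/group_vars/all/all.yml.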

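- One-command deployment

Deploy:

# -vvvv prints the most detailed logs; enabling it is recommended

ansible-playbook -i inventory/mycluster/hosts.yaml -b cluster.yml -vvvv

To shorten the deployment, you can pull the images ahead of time: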

vim kubespray-v2.15.0-images.sh

#!/bin/bash

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6 docker.io/kubernetesui/metrics-scraper:v1.0.6

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19 docker.io/library/nginx:1.19

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0 k8s.gcr.io/dns/k8s-dns-node-cache:1.16.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2 k8s.gcr.io/ingress-nginx/controller:v0.41.2

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7 k8s.gcr.io/kube-apiserver:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7 k8s.gcr.io/kube-controller-manager:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7 k8s.gcr.io/kube-proxy:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7 k8s.gcr.io/kube-scheduler:v1.19.7

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2 k8s.gcr.io/pause:3.2

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3 k8s.gcr.io/pause:3.3

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0 docker.io/kubernetesui/dashboard-amd64:v2.1.0

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3 k8s.gcr.io/cpa/cluster-proportional-autoscaler-amd64:1.8.3

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5 quay.io/calico/cni:v3.16.5

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5 quay.io/calico/kube-controllers:v3.16.5

crictl pull registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5 || exit 1

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5 quay.io/calico/node:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_metrics-scraper:v1.0.6

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/library_nginx:1.19

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/coredns:1.7.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/dns_k8s-dns-node-cache:1.16.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/ingress-nginx_controller:v0.41.2

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-apiserver:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-controller-manager:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-proxy:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kube-scheduler:v1.19.7

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.2

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause:3.3

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/kubernetesui_dashboard-amd64:v2.1.0

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/cpa_cluster-proportional-autoscaler-amd64:1.8.3

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_cni:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_kube-controllers:v3.16.5

ctr -n k8s.io i rm registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/calico_node:v3.16.5
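The script above repeats the same pull, tag, and remove pattern for every image, so it could also be written as a loop over pairs of mirror name and expected name. The following is a sketch of that idea covering only a few of the images; `run`, `mirror_image`, and the `DRY_RUN` switch are my own additions, and by default it only prints the commands. Unlike the original, it removes each mirror tag right after retagging rather than at the end.

```shell
#!/bin/sh
# Sketch: loop-based variant of the pre-pull script (a subset of the images).
MIRROR=registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray
# Set DRY_RUN=0 on a real node to execute instead of printing.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

mirror_image() {
  # $1 = image name on the mirror, $2 = image name kubespray expects
  run crictl pull "$MIRROR/$1" || return 1
  run ctr -n k8s.io i tag "$MIRROR/$1" "$2" || return 1
  run ctr -n k8s.io i rm "$MIRROR/$1"
}

# One "mirror-name expected-name" pair per line.
while read -r src dst; do
  mirror_image "$src" "$dst" < /dev/null || exit 1
done <<'EOF'
coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
pause:3.2 k8s.gcr.io/pause:3.2
kube-proxy:v1.19.7 k8s.gcr.io/kube-proxy:v1.19.7
calico_node:v3.16.5 quay.io/calico/node:v3.16.5
EOF
```

Adding an image then means adding one line to the list instead of three commands.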

chmod +x ./kubespray-v2.15.0-images.sh

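./kubespray-v2.15.0-images.sh

- Clean up the proxy settings

My proxy was on port 7890, so a grep for 7890 finds everything. This has to be done on every node.

# Manually delete the proxy settings that grep reports

grep 7890 -r /etc/yum*

Remove the container runtime's HTTP proxy (containerd in this tutorial; run on every node)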

rm -f /etc/systemd/system/containerd.service.d/http-proxy.conf

systemctl daemon-reload

systemctl restart containerd
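To confirm that nothing still references the proxy after the cleanup, the same grep can be wrapped so it lists only the files that mention the port. A minimal sketch, with `find_proxy_refs` as a hypothetical helper name, demonstrated on a scratch directory:

```shell
#!/bin/sh
# List files under the given paths that still mention ":<port>".
# Unreadable or missing paths are skipped silently.
find_proxy_refs() {
  port=$1; shift
  grep -rlF -- ":$port" "$@" 2>/dev/null
}

# Demonstration on a scratch directory.
scratch=$(mktemp -d)
echo 'proxy=http://192.168.31.214:7890' > "$scratch/yum.conf"
echo 'keepcache=0' > "$scratch/other.conf"
find_proxy_refs 7890 "$scratch"

# On a real node, for example:
#   find_proxy_refs 7890 /etc/yum* /etc/systemd/system/containerd.service.d
```

An empty result (and a non-zero exit status from the function) means no file under those paths mentions the port any more.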